
An experiment in mixed reality and gesture recognition

IE Digital’s newest recruit, Andreea Blaga, joins us fresh from university, where she clearly made an impact. Her final-year dissertation on gesture recognition won the Inspirational Innovation Award at Birmingham City University’s (BCU’s) Innovation Fest 2017.

So, we asked Andreea to explain a bit about this fascinating piece of work...

In the modern world, human-computer interaction (HCI) technologies are building a richer bridge between humans and computers (Bhatt et al., 2011). Until relatively recently, this interaction has relied on mechanical devices such as mice, keyboards and gamepads.

As technology advances, we’re increasingly seeing equipment being developed that allows the user to interact with the computer via hand or body gestures. Such equipment allows us to harness one of the most common ways of everyday communication amongst humans; for example, most children express themselves using gestures before they learn how to talk. The main goal for the evolution of HCI technology is to achieve this same kind of intuitive interaction between humans and computers.

Ideally, for the most natural interactions, gesture recognition devices would replace the mechanical ones. Experts are divided over how quickly this might become a reality: while some state that the mouse will soon fall out of use (Kabdwal, 2013), others argue that it may take a while before applications make good use of this type of device, as was the case with the computer mouse and the touch screen before it (Vogel and Sharples, 2013). This delay might be due to several usability issues that need to be fixed before we can achieve a truly intuitive, natural interaction. “A massive effort is perhaps needed before one can build practical multimodal HCI systems approaching the naturalness of human–human communication.” (Bhatt et al., 2011)

The research project

Various motion-recognition devices have emerged over the past few years, such as Microsoft Kinect (2010) and the Myo Armband (2013). For my final-year project, I focused on the Leap Motion Controller, shown below: a hardware sensor that takes hand and finger motion as input, much as a mouse does, but without requiring any physical contact. It recognises motion using two cameras.

Since the most important aspect of an HCI system is the ability of users to adapt to using it, I chose to explore the usability of the sensor. I developed a 3D interactive system in a mixed reality (MR) environment, where physical and digital objects co-exist and interact in real time. The Leap Motion API provided the mixed reality environment, with the rest of the system developed using Unity and C#.

I then used the system to test the usability of the Leap Motion Controller as an input device, through a number of scenarios.

Implementing the gesture recognition experiment

As part of system initialisation, users had to register by entering their ID, age and gender. This step also confirmed that everything was working correctly.

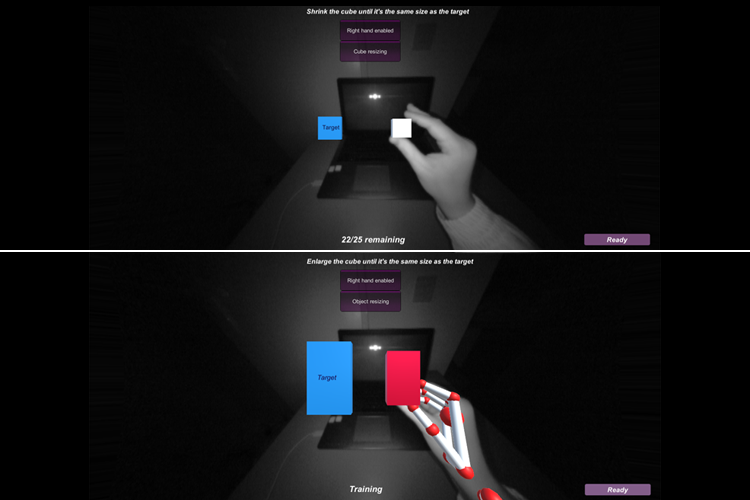

The test subjects then had five different interaction tasks to complete. Each task featured two 3D objects in the MR environment: an “interaction cube” and a “target cube”, which showed the position, rotation and size that the interaction cube should have at the end of the task. Every task was based on a ‘pinch’ gesture. The tasks were delivered in random order, five times each for every subject, and timed.

- Move the interaction cube to the position of the target cube

- Enlarge the interaction cube to match the size of the target cube

- Shrink the interaction cube to match the target

- Rotate in 2D – rotate the interaction cube on the z-axis

- Rotate in 3D – rotate the interaction cube on the y-axis
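The delivery of the tasks above can be illustrated with a short sketch. This is not the code from the study (which was written in C# for Unity); it is a minimal Python illustration that assumes the 25 trials per subject (five tasks, five repetitions) were fully interleaved in one shuffled sequence. The names `TASKS`, `build_schedule` and `time_trial` are hypothetical.

```python
import random
import time

# Task labels are illustrative names for the five tasks listed above.
TASKS = ["move", "enlarge", "shrink", "rotate_2d", "rotate_3d"]
REPETITIONS = 5  # each task is presented five times per subject


def build_schedule(seed=None):
    """Return every trial for one subject in a shuffled order.

    Assumption: trials are fully interleaved rather than grouped by task.
    """
    rng = random.Random(seed)
    trials = [task for task in TASKS for _ in range(REPETITIONS)]
    rng.shuffle(trials)
    return trials


def time_trial(run_trial):
    """Call run_trial() and return how long it took, in seconds."""
    start = time.perf_counter()
    run_trial()
    return time.perf_counter() - start


schedule = build_schedule(seed=42)
```

Passing a seed makes a subject's schedule reproducible, which helps when matching logged timings back to the order in which trials were shown.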

For each task, the most important factor was ensuring that the sensor could recognise the hand. The system then calculated a number of distances between the participant’s hand and the virtual 3D object.

To interact with the 3D object, the user had to both “touch” the object and perform the correct gesture. In every task, the system calculated the distance between the thumb and index finger, along with the position and rotation of the thumb, index and palm joints. For each frame it also calculated the distance from the boundary of the cube to the hand, and the midpoint of the pinch.
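The measurements described above can be sketched in a few lines. This is an illustration only, not the study's Unity/C# code: it assumes fingertip positions are available as (x, y, z) tuples in millimetres, treats the cube as axis-aligned (unrotated), and uses a hypothetical pinch threshold. All function names and the constant `PINCH_THRESHOLD_MM` are assumptions.

```python
import math

# Hypothetical threshold: fingertips closer than this count as a pinch.
PINCH_THRESHOLD_MM = 30.0


def pinch_distance(thumb_tip, index_tip):
    """Euclidean distance between the thumb tip and index fingertip."""
    return math.dist(thumb_tip, index_tip)


def pinch_midpoint(thumb_tip, index_tip):
    """Point halfway between the two fingertips."""
    return tuple((t + i) / 2 for t, i in zip(thumb_tip, index_tip))


def distance_to_cube(point, centre, half_size):
    """Distance from a point to the boundary of an axis-aligned cube.

    Returns 0.0 when the point is inside or on the cube.
    """
    outside = [max(abs(p - c) - half_size, 0.0) for p, c in zip(point, centre)]
    return math.hypot(*outside)


def is_grabbing(thumb_tip, index_tip, centre, half_size,
                threshold=PINCH_THRESHOLD_MM):
    """True when the hand is pinching and the pinch midpoint touches the cube."""
    pinching = pinch_distance(thumb_tip, index_tip) < threshold
    midpoint = pinch_midpoint(thumb_tip, index_tip)
    touching = distance_to_cube(midpoint, centre, half_size) == 0.0
    return pinching and touching
```

A rotated cube would first need the point transformed into the cube's local coordinates; that step is left out here to keep the sketch short.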

The experiment investigated two different conditions, shown below: one where the user could only see their real hand as captured by the Leap Motion’s cameras (“No Hand” – top picture), and one where a 3D modelled hand was overlaid on the user’s hand in the MR environment (“Hand” – bottom picture).

As well as the various distance measurements derived from the sensor, and the time taken to complete each task, I collected feedback data from participants regarding the perceived usability of the controller, through a series of questionnaires.

The study and results on usability

Following a smaller pilot study involving experts from my department to identify any possible improvements, the main study involved 30 participants (all right-handed in this instance) from BCU who were unfamiliar with the system.

Of the five different tasks, participants showed a strong preference for the Move task, both with and without the hand overlay. The data revealed that the participants from the “no hand” condition were much slower in interacting with the object than the participants from the “hand” condition. This suggests that the additional feedback that the graphical hand provides on the position of the hand and cube makes a big difference to the usability of the sensor.

The hand overlay also measurably improved the accuracy of the Rotate in 2D task, although there was no significant difference for the Rotate in 3D task, which was also the quickest task to perform overall.

The Enlarge task was perceived to be more user-friendly for participants from the “no hand” condition, although the measured data showed no significant difference in the time taken.

Overall, the usability of the system was good, with a System Usability Scale (SUS) score of over 68 for both conditions. Most of the users reported that the Leap Motion Controller was fun and easy to use compared with classic input devices, but that they would not use it as a replacement for mouse and keyboard. However, most agreed that with several improvements, the Leap Motion Controller could be a very useful tool in the future.
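For readers unfamiliar with SUS, the standard scoring rule is easy to show in code. The questionnaire has ten statements, each rated from 1 to 5; odd-numbered items contribute their rating minus 1, even-numbered items contribute 5 minus their rating, and the sum is multiplied by 2.5 to give a score from 0 to 100. A score of 68 is commonly quoted as the average benchmark. The function name `sus_score` is illustrative and the responses in the usage note are made up, not data from the study.

```python
def sus_score(responses):
    """Compute a System Usability Scale score from ten 1-5 ratings.

    responses[0] is item 1, responses[1] is item 2, and so on.
    """
    if len(responses) != 10:
        raise ValueError("SUS needs exactly ten responses")
    total = 0
    for item_number, rating in enumerate(responses, start=1):
        if not 1 <= rating <= 5:
            raise ValueError("each rating must be between 1 and 5")
        if item_number % 2 == 1:
            total += rating - 1   # odd items are positively worded
        else:
            total += 5 - rating   # even items are negatively worded
    return total * 2.5
```

For example, a participant who answers 3 to every statement scores 50.0, while alternating 5 and 1 (strong agreement with every positive statement, strong disagreement with every negative one) gives the maximum of 100.0.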

Gesture recognition might be the future of human-computer interaction (Bhatt et al., 2011), and has huge potential for use in a wide range of disciplines – from research experiments and prototypes, to day-to-day commercial products in areas like entertainment, consumer electronics, automotive, healthcare and education.

With continued research and investment in the technology, it will be exciting to see how HCI develops in the next few years, and what uses we can find for it.

Andreea Blaga

Web Developer, IE Digital